Designing Automation for High-Accountability Startup Environments

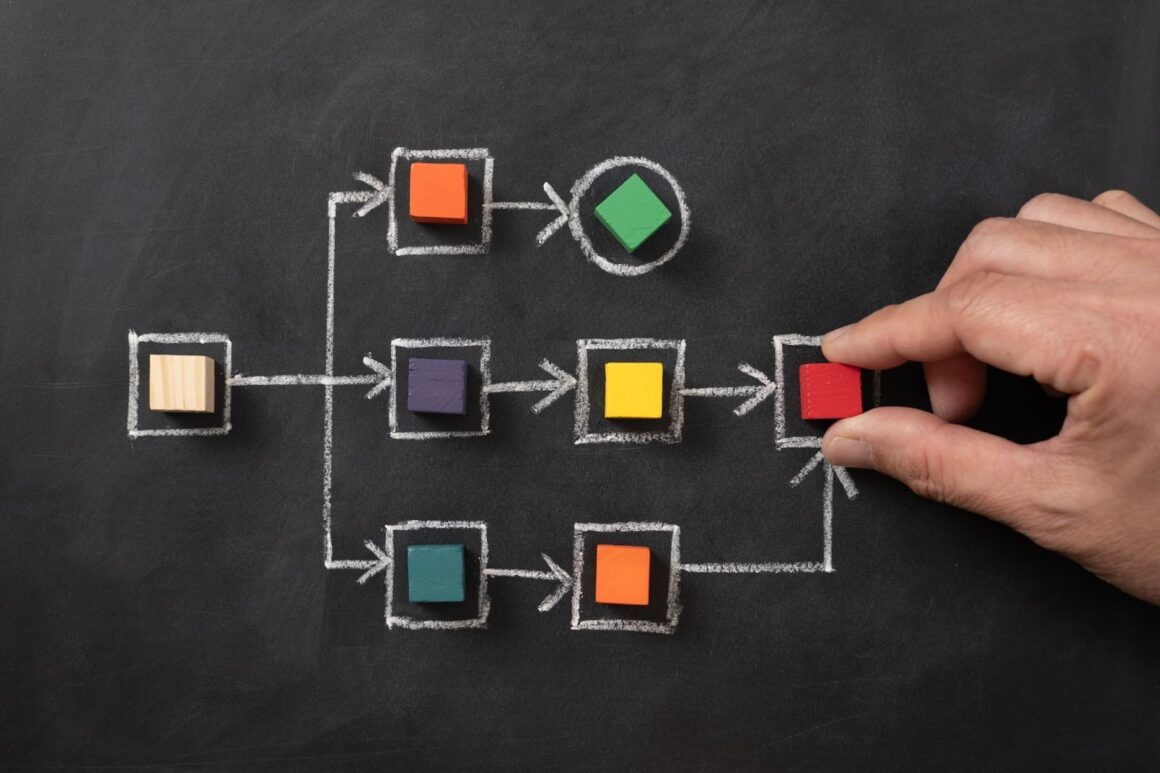

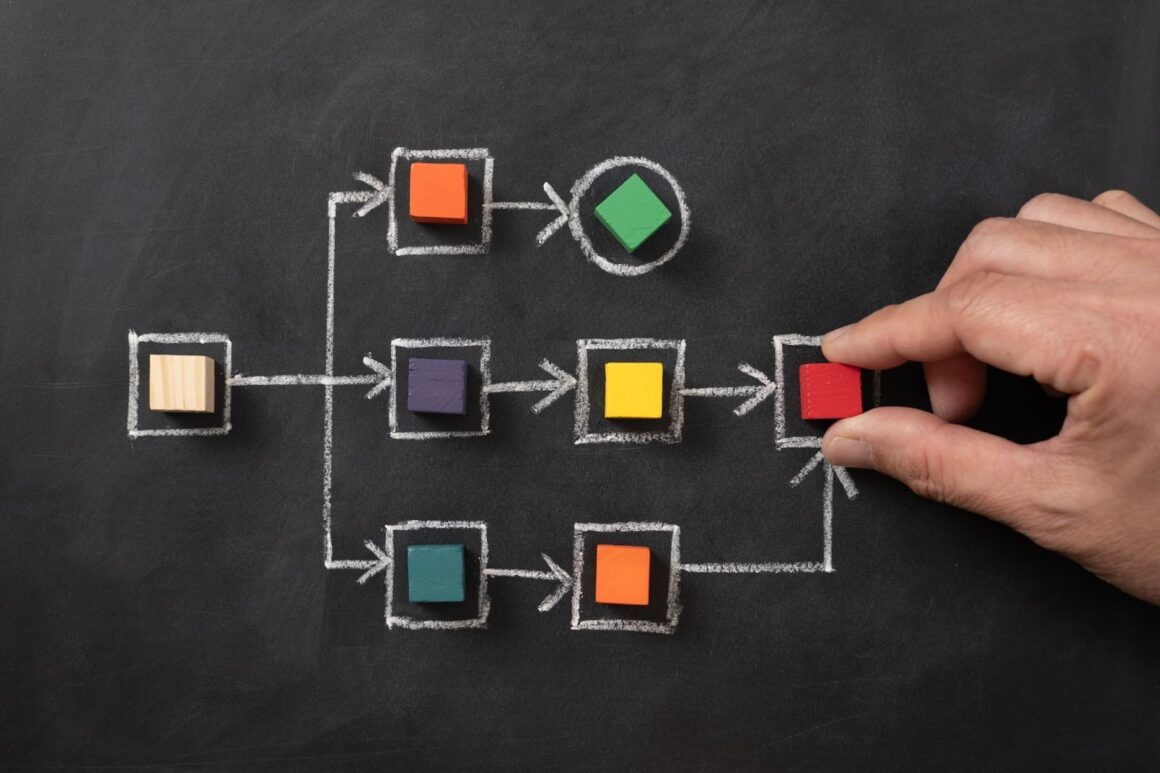

Automation often enters startup environments during periods of operational strain. Teams aim to reduce repeated manual actions, accelerate delivery timelines, and limit human error across routine processes. These goals appear reasonable, yet automation frequently creates confusion instead of improvement. The underlying issue rarely involves software capability; it usually stems from unclear responsibility.

In early-stage organizations, work moves through individuals rather than formal departments, and decision authority often overlaps. When automation enters this environment without explicit responsibility mapping, decision ownership becomes harder to trace. High-accountability startup environments treat automation as a responsibility-carrying system, where every automated action remains linked to a person who reviews outcomes and accepts consequences.

Understanding Accountability in Startup Organizations

Accountability within startup organizations operates differently from accountability in large institutions. Smaller teams require clarity without bureaucracy, and systems must reflect how work actually occurs rather than how it appears on paper.

Accountability as Ownership of Outcomes

Accountability refers to the condition where outcomes can be traced back to identifiable decision-makers. When a process produces a result, someone must be able to explain how that result occurred and why a particular decision path was followed. This traceability supports learning, correction, and trust.

In startup environments, accountability does not depend on hierarchy. A junior employee may own a decision, while a founder may act as a reviewer. What matters is that responsibility remains explicit rather than implied.

When accountability is unclear, teams struggle to learn from mistakes. Outcomes appear detached from decisions, and corrective actions become speculative rather than informed.

Why Startup Contexts Increase Accountability Risk

Startup teams often operate with overlapping responsibilities. A single person may design a workflow, execute it, and review its outcome. This structure supports speed, yet it also increases reliance on shared assumptions.

As automation enters these environments, assumptions become encoded into systems. If responsibility remains undocumented, automation spreads ambiguity at scale. Tasks execute reliably, yet teams cannot explain failures without reconstructing decision history manually.

High-accountability automation requires systems that reflect real responsibility structures rather than idealized process diagrams.

The Relationship Between Automation and Accountability

Automation reflects the conditions of the organization in which it operates. It does not resolve unclear responsibility or compensate for missing ownership. Instead, automation increases the speed and scale at which existing practices occur. If decision ownership is well defined, automation strengthens consistency. If ownership is unclear, automation spreads confusion more efficiently.

Clear accountability allows automation to reinforce reliability. Each automated action connects to a responsible owner, and outcomes remain traceable during review. In contrast, automation introduced without defined decision ownership often increases error volume. Actions execute faster, yet teams struggle to identify why failures occurred or who should respond.

Accountability provides the structure that allows teams to learn from automated outcomes. When ownership remains explicit, errors become reviewable events rather than unexplained incidents. Without this structure, automation masks problems by producing results without context.

Trust in automated systems depends on traceability. Team members rely on automation when they understand who monitors behavior, how exceptions are handled, and where responsibility sits. Educational approaches to automation, therefore, treat systems as extensions of human responsibility rather than independent decision-makers.

Preparing Processes Before Automation Design

Automation design should begin with process understanding rather than software selection. Before introducing automated execution, teams need a shared view of how work currently moves, where decisions occur, and who carries responsibility at each stage. Without this groundwork, automation risks encoding confusion instead of resolving it. Preparing processes first allows automation to reinforce accountability instead of obscuring it.

Identifying Tasks Suitable for Automation

Tasks suitable for automation share clear operational characteristics. They follow repeatable rules, produce predictable outputs, and rely on limited contextual interpretation. These qualities allow automated systems to operate consistently without undermining judgment or responsibility.

Examples include scheduled data aggregation, status synchronization, and notification routing. In these cases, consistency matters more than discretion, and automation reduces manual effort without altering decision ownership.

By contrast, tasks involving interpretation, ethical consideration, or irreversible impact require human oversight. Automating these actions without review removes learning opportunities and increases organizational risk. Teams should therefore draw clear boundaries between execution support and decision authority.

Process stability also matters. Workflows that undergo frequent change should remain flexible until patterns stabilize. Encoding unstable assumptions into automation restricts improvement and complicates future adjustments.
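The criteria above can be expressed as a simple screening check. The sketch below is a hypothetical illustration, not a standard scoring method: the `TaskProfile` fields, the `automation_fit` function, and the `max_changes` stability cutoff are assumptions chosen to mirror the characteristics listed in this section.

```python
from dataclasses import dataclass


# Hypothetical task description; field names mirror the criteria in the text.
@dataclass
class TaskProfile:
    name: str
    rule_based: bool            # follows repeatable rules
    predictable_output: bool    # produces predictable outputs
    needs_interpretation: bool  # relies on contextual judgment
    irreversible: bool          # irreversible or ethically sensitive impact
    recent_changes: int         # process changes during the review window


def automation_fit(task: TaskProfile, max_changes: int = 2) -> tuple[str, list[str]]:
    """Return a recommendation and the reasons behind it (assumed cutoffs)."""
    reasons = []
    if not task.rule_based:
        reasons.append("no repeatable rules")
    if not task.predictable_output:
        reasons.append("unpredictable output")
    if task.needs_interpretation:
        reasons.append("requires interpretation")
    if task.irreversible:
        reasons.append("irreversible impact")
    if task.recent_changes > max_changes:
        reasons.append("workflow still changing")

    if task.needs_interpretation or task.irreversible:
        return "human decision", reasons  # automation may only prepare inputs
    if reasons:
        return "defer", reasons           # revisit once the process stabilizes
    return "automate", reasons


print(automation_fit(TaskProfile("status sync", True, True, False, False, 0)))
# ('automate', [])
```

Returning the reasons alongside the label keeps the screening decision itself reviewable rather than a bare verdict.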

Responsibility Mapping as a Design Requirement

Responsibility mapping establishes the foundation for accountable automation. Before automating any process, teams should explicitly identify who initiates actions, who approves decisions, who reviews outcomes, and who responds to failures. This clarity prevents responsibility from dispersing once execution accelerates.

Responsibility mapping often reveals gaps that automation cannot correct. Some steps may lack owners, while others depend on informal knowledge that systems cannot infer. Addressing these issues before automation improves both system reliability and team alignment.

Documented responsibility structures also serve as long-term references. As teams grow and roles change, these records preserve accountability by explaining how ownership was intended to function, preventing gradual erosion over time.
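A responsibility map can start as plain data. In this sketch, the four role names come from the questions above (who initiates, approves, reviews, and responds to failures); the `find_gaps` helper and the sample `invoice_flow` steps and people are hypothetical. The check surfaces steps that lack an owner before any automation is built.

```python
# Role names follow the four questions in the text; everything else is illustrative.
ROLES = ("initiator", "approver", "reviewer", "failure_responder")


def find_gaps(mapping: dict[str, dict[str, str]]) -> dict[str, list[str]]:
    """Return, for each process step, the roles that have no named owner."""
    gaps = {}
    for step, owners in mapping.items():
        missing = [role for role in ROLES if not owners.get(role)]
        if missing:
            gaps[step] = missing
    return gaps


# Hypothetical map for a small invoicing process.
invoice_flow = {
    "generate invoice": {
        "initiator": "ana", "approver": "ben",
        "reviewer": "ben", "failure_responder": "ana",
    },
    "send reminder": {
        "initiator": "scheduler",  # a system may initiate the step
        "reviewer": "",            # empty string counts as unassigned
        "failure_responder": "ana",
    },
}

print(find_gaps(invoice_flow))
# {'send reminder': ['approver', 'reviewer']}
```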

Designing Automation Around Human Decision Boundaries

Automation delivers the most value when it respects the limits of algorithmic execution. Automated systems excel at repeatable actions and rule-based checks, yet they struggle with judgment, context, and consequence evaluation. High-accountability startup environments recognize this distinction and design automation to support human decision-making rather than replace it. This approach protects responsibility, preserves learning, and prevents unintended outcomes.

Identifying Decision Points That Require Human Oversight

Certain decision points require human oversight because their consequences extend beyond technical correctness. Financial approvals affect cash flow and trust. Policy exceptions influence fairness and consistency. Customer-facing changes shape reputation and long-term relationships. These decisions demand accountability that cannot reside entirely within automated logic.

Automation should assist these decisions by preparing relevant information rather than executing actions autonomously. Systems can collect inputs, validate basic conditions, and highlight potential risks. They can summarize history, flag deviations, and present structured recommendations. However, the final decision must remain with a person who understands context and accepts responsibility for the outcome.

Clear decision boundaries protect organizations by preventing silent execution of high-impact actions. When boundaries remain explicit, teams know where automation stops and judgment begins. This clarity also preserves learning, because humans remain involved in evaluating outcomes and refining assumptions.
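One way to enforce this boundary in code is to separate preparation from execution. The sketch below assumes a hypothetical vendor-payment workflow: `prepare_payment` validates basic conditions and flags risks, while `execute` refuses to run until a named person is recorded as the approver. The 5,000 limit and the flag wording are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DecisionPacket:
    """Information prepared by automation for a human decision-maker."""
    action: str
    amount: float
    checks_passed: list[str] = field(default_factory=list)
    flags: list[str] = field(default_factory=list)
    approved_by: Optional[str] = None  # set only by a person


def prepare_payment(amount: float, vendor_known: bool, limit: float = 5000.0) -> DecisionPacket:
    """Validate basic conditions and highlight risks; never pays anything."""
    packet = DecisionPacket(action="pay vendor", amount=amount)
    if amount > 0:
        packet.checks_passed.append("amount is positive")
    else:
        packet.flags.append("non-positive amount")
    if not vendor_known:
        packet.flags.append("vendor not on approved list")
    if amount > limit:
        packet.flags.append(f"amount exceeds {limit:.0f} limit")
    return packet


def execute(packet: DecisionPacket) -> str:
    """The decision boundary: no recorded human approval, no execution."""
    if packet.approved_by is None:
        raise PermissionError("no human approval recorded; refusing to execute")
    return f"{packet.action} ({packet.amount:.2f}) approved by {packet.approved_by}"


packet = prepare_payment(7200.0, vendor_known=False)
print(packet.flags)
# ['vendor not on approved list', 'amount exceeds 5000 limit']
packet.approved_by = "finance lead"
print(execute(packet))
# pay vendor (7200.00) approved by finance lead
```

In a real system the approval would arrive through an authenticated interface rather than a plain attribute; the sketch only shows that execution checks for it.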

Structuring Human Review Within Automated Workflows

Human-in-the-loop automation integrates execution efficiency with contextual review. Automated steps prepare actions consistently, while reviewers evaluate outputs using situational awareness. This structure supports speed without sacrificing accountability.

Well-designed review systems specify who reviews which actions and under what conditions. Review responsibility should align with authority, experience, and availability. When review expectations remain unclear, delays increase, and accountability weakens.

Escalation structures reinforce this design by addressing situations that exceed expected parameters. When automated checks detect anomalies or boundary violations, systems should notify assigned reviewers with sufficient context to act effectively. Notifications must include relevant data, recent history, and the reason for escalation. Alerts that lack context or ownership create noise rather than resolution and undermine trust in automation.
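The requirement that every escalation carry an owner and context can be checked before an alert is sent. This is a minimal sketch: the `Escalation` fields follow the items named above (assigned reviewer, relevant data, recent history, reason), while the function names, the three-event history window, and the sample refund workflow are assumptions.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Escalation:
    workflow: str
    reviewer: str          # assigned owner, never empty
    reason: str            # why the automated check escalated
    data: dict             # values that triggered the check
    recent_history: list   # the last few related events
    created_at: datetime


def build_escalation(workflow: str, reviewer: str, reason: str, data: dict,
                     history: list, now: Optional[datetime] = None) -> Escalation:
    """Refuse to create an alert that lacks an owner or context."""
    if not reviewer:
        raise ValueError(f"{workflow}: escalation has no assigned reviewer")
    if not reason or not data:
        raise ValueError(f"{workflow}: escalation lacks a reason or triggering data")
    return Escalation(workflow, reviewer, reason, data, history[-3:], now or datetime.now())


def render(e: Escalation) -> str:
    """Format the alert so the reviewer sees owner, reason, data, and history."""
    lines = [f"[{e.workflow}] assigned to {e.reviewer}", f"Reason: {e.reason}"]
    lines += [f"  {key} = {value}" for key, value in e.data.items()]
    lines += [f"  history: {event}" for event in e.recent_history]
    return "\n".join(lines)


alert = build_escalation(
    "refund sync", "maria", "daily refund total above threshold",
    {"total": 12500, "threshold": 10000},
    ["Mon: 3 refunds", "Tue: 4 refunds", "Wed: 2 refunds", "Thu: 11 refunds"],
)
print(render(alert))
```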

Preserving Explicit Override Authority

Override authority represents a core accountability mechanism. Teams must know who can pause automation, reverse actions, or modify logic when conditions change. This authority should remain explicit, documented, and visible to those interacting with the system.

Documenting override authority serves multiple purposes. It clarifies responsibility during incidents, reduces hesitation during urgent situations, and prevents unauthorized intervention. Clear documentation also supports training and onboarding by explaining how exceptional cases are handled.

Override authority should never remain implicit or assumed. When no one feels empowered to intervene, automation becomes rigid and unsafe. When authority is clear, automation remains flexible and accountable, even under pressure.
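Override authority can be made explicit by attaching a documented list of people to each automation and logging every intervention, including refused ones. The `AutomationControl` class below is a hypothetical sketch; a real deployment would tie identities to the platform's own authentication rather than plain strings.

```python
from datetime import datetime, timezone
from typing import Callable


class AutomationControl:
    """Pause/resume switch with a documented, visible list of override owners."""

    def __init__(self, name: str, override_owners: set[str]):
        self.name = name
        self.override_owners = set(override_owners)
        self.paused = False
        self.log: list[tuple[str, str, str]] = []  # (UTC timestamp, person, action)

    def _authorize(self, person: str, action: str) -> None:
        stamp = datetime.now(timezone.utc).isoformat()
        if person not in self.override_owners:
            self.log.append((stamp, person, f"DENIED {action}"))
            raise PermissionError(f"{person} has no override authority for {self.name}")
        self.log.append((stamp, person, action))

    def pause(self, person: str, reason: str) -> None:
        self._authorize(person, f"pause: {reason}")
        self.paused = True

    def resume(self, person: str) -> None:
        self._authorize(person, "resume")
        self.paused = False

    def run(self, task: Callable[[], str]) -> str:
        """Run one automated task unless an authorized person has paused it."""
        if self.paused:
            return "skipped: automation paused"
        return task()


reminders = AutomationControl("invoice reminders", {"lee", "ana"})
reminders.pause("lee", "wrong email template")
print(reminders.run(lambda: "sent"))  # skipped: automation paused
```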

Monitoring, Feedback, and Learning Structures

Automation that operates without monitoring creates motion without understanding. Actions occur, tasks close, and notifications fire, yet teams struggle to explain outcomes or correct recurring problems. High-accountability systems avoid this pattern by embedding monitoring and feedback directly into how automated work operates. Monitoring does not exist for control. Monitoring exists to make responsibility visible and improvement possible.

Designing Metrics That Reinforce Responsibility

Metrics shape behavior, so metric design directly affects accountability. Effective metrics connect outcomes to decision ownership instead of summarizing activity volume. Counting completed actions alone rarely explains success or failure. Teams need signals that reveal how responsibility was exercised and where follow-up occurred.

Metrics that reinforce responsibility often include indicators such as:

- Error frequency tied to specific workflows or ownership groups

- Response time measured from alert creation to first meaningful action

- Resolution pathways that show who intervened and how issues were closed

Ownership-linked metrics make responsibility explicit. For example, tracking how quickly assigned reviewers respond to alerts reveals how accountability is distributed across a team. This information supports learning discussions rather than personal judgment, because it shows where systems support or hinder follow-through.
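The response-time indicator above can be computed directly from alert records. This sketch assumes each hypothetical record stores the assigned reviewer, the alert's creation time, and the time of the first meaningful action (or `None` if no one has acted yet). It reports the median per reviewer, which is less sensitive to a single outlier than a mean, together with the count of unanswered alerts.

```python
from collections import defaultdict
from datetime import datetime
from statistics import median
from typing import Optional

# Hypothetical alert records: (reviewer, created_at, first_meaningful_action_at)
alerts: list[tuple[str, datetime, Optional[datetime]]] = [
    ("ana", datetime(2024, 5, 1, 9, 0), datetime(2024, 5, 1, 9, 20)),
    ("ana", datetime(2024, 5, 2, 14, 0), datetime(2024, 5, 2, 14, 10)),
    ("ben", datetime(2024, 5, 1, 10, 0), datetime(2024, 5, 1, 13, 0)),
    ("ben", datetime(2024, 5, 3, 8, 0), None),  # no action yet
]


def response_summary(records):
    """Median minutes from alert creation to first action, per reviewer."""
    minutes = defaultdict(list)
    still_open = defaultdict(int)
    for reviewer, created, first_action in records:
        if first_action is None:
            still_open[reviewer] += 1
        else:
            minutes[reviewer].append((first_action - created).total_seconds() / 60)
    summary = {}
    for reviewer in sorted(set(minutes) | set(still_open)):
        times = minutes.get(reviewer, [])
        summary[reviewer] = {
            "median_min": median(times) if times else None,
            "open": still_open.get(reviewer, 0),
        }
    return summary


print(response_summary(alerts))
# {'ana': {'median_min': 15.0, 'open': 0}, 'ben': {'median_min': 180.0, 'open': 1}}
```

The unanswered alert is reported separately rather than dropped; otherwise a reviewer with weak follow-through could appear fast simply because slow cases never close.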

Aggregated averages often conceal problems. When metrics flatten results across many cases, repeated failures disappear into acceptable totals. High-accountability systems surface exceptions intentionally. Exceptions highlight where assumptions fail, where capacity strains appear, and where ownership boundaries need adjustment.
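A small illustration of how an aggregate can hide a repeated failure. The run log, workflow names, and thresholds here (a 5% per-workflow error rate, or two failures of the same workflow) are hypothetical; the point is that the overall rate looks acceptable while one workflow fails often.

```python
from collections import Counter

# Hypothetical run log: (workflow, succeeded)
runs = (
    [("status_sync", True)] * 95
    + [("invoice_export", True)] * 3
    + [("invoice_export", False)] * 2
)


def overall_error_rate(log: list[tuple[str, bool]]) -> float:
    return sum(1 for _, ok in log if not ok) / len(log)


def workflow_exceptions(log: list[tuple[str, bool]], max_rate: float = 0.05,
                        min_failures: int = 2) -> dict[str, float]:
    """Flag workflows whose own error rate or repeat failures exceed the limits."""
    totals = Counter(wf for wf, _ in log)
    failures = Counter(wf for wf, ok in log if not ok)
    flagged = {}
    for wf, total in totals.items():
        rate = failures[wf] / total
        if rate > max_rate or failures[wf] >= min_failures:
            flagged[wf] = round(rate, 2)
    return flagged


print(f"overall error rate: {overall_error_rate(runs):.0%}")  # overall error rate: 2%
print(workflow_exceptions(runs))  # {'invoice_export': 0.4}
```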

Building Feedback Loops Into Automated Workflows

Feedback loops convert monitoring data into learning. Without structured feedback, metrics accumulate without effect. High-accountability environments schedule regular reviews where automation behavior receives focused attention.

Effective reviews examine several dimensions simultaneously. Teams assess whether automated rules still reflect current responsibilities, identify recurring failure patterns, and evaluate decision boundaries that trigger human review. These discussions work best when supported by concrete records rather than anecdotal recollection.

Feedback must lead to adjustment. As teams grow, responsibilities are redistributed, and workflows expand, automation logic requires revision. Useful adjustments may include:

- Refining escalation timing to match real response capacity

- Updating ownership mapping after organizational changes

- Tightening validation rules after repeated input errors

When feedback connects directly to system updates, teams see automation as adaptable rather than rigid. This perception supports trust and continued engagement.

Encouraging Examination Rather Than Passive Acceptance

Learning depends on examination. Automated systems that discourage questioning weaken accountability by presenting outputs as unquestionable facts. High-accountability environments treat automated results as inputs for judgment rather than final answers.

Systems should encourage examination by making reasoning visible. This includes showing which rules applied, which thresholds triggered alerts, and which data points influenced outcomes. When people understand why a system acted, they can evaluate whether the action aligned with the intent.
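Making reasoning visible can be as simple as returning the rules, thresholds, and values behind a decision instead of a bare verdict. The `Rule` class, rule names, and thresholds below are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    name: str
    metric: str        # which field of the record the rule inspects
    threshold: float   # the rule fires when the value exceeds this

    def fires(self, record: dict) -> bool:
        return record.get(self.metric, 0) > self.threshold


def evaluate(record: dict, rules: list[Rule]) -> dict:
    """Return the decision plus the rules, values, and thresholds that drove it."""
    fired = [
        {"rule": r.name, "metric": r.metric,
         "value": record.get(r.metric, 0), "threshold": r.threshold}
        for r in rules
        if r.fires(record)
    ]
    return {"decision": "escalate" if fired else "pass", "explanation": fired}


rules = [Rule("large order", "amount", 1000), Rule("many retries", "retries", 3)]
result = evaluate({"amount": 1500, "retries": 1}, rules)
print(result["decision"])  # escalate
for item in result["explanation"]:
    print(f"{item['rule']}: {item['metric']}={item['value']} > {item['threshold']}")
# large order: amount=1500 > 1000
```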

Cultural reinforcement matters as well. Teams should view questions about automation as constructive, not disruptive. When someone challenges an automated result, the response should focus on understanding assumptions rather than defending the system.

Automation that invites examination strengthens learning. Automation that resists examination becomes brittle and opaque. High-accountability systems choose the first path deliberately.

Selecting Automation Platforms That Support Accountability

Platform selection directly affects how accountability operates within automated systems. The choice determines whether responsibility remains visible or becomes hidden behind software behavior. In startup environments, platforms must reinforce traceability rather than obscure it, because unclear systems weaken ownership and limit learning.

Platforms that support accountability make actions observable. Users must be able to see what occurred, when it occurred, and who initiated the action. This visibility allows teams to reconstruct events during reviews and understand how outcomes emerged. Action histories should record timestamps, initiating users or systems, applied rules, and resulting changes. When these records remain accessible, accountability remains grounded in evidence rather than memory.
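An action history with the fields listed above might look like the following sketch. `ActionRecord` and `ActionHistory` are hypothetical names. The design choice worth noting is that the history exposes no way to edit or delete entries, so reviews work from what actually happened. A production platform would persist these records rather than hold them in memory.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ActionRecord:
    timestamp: str      # UTC, ISO 8601
    initiated_by: str   # a user or a system identity
    rule: str           # the rule or workflow step that applied
    change: dict        # values before and after the action


class ActionHistory:
    """Append-only: there is deliberately no method to edit or delete entries."""

    def __init__(self) -> None:
        self._entries: list[ActionRecord] = []

    def record(self, initiated_by: str, rule: str, before: dict, after: dict) -> ActionRecord:
        entry = ActionRecord(
            timestamp=datetime.now(timezone.utc).isoformat(),
            initiated_by=initiated_by,
            rule=rule,
            change={"before": before, "after": after},
        )
        self._entries.append(entry)
        return entry

    def by_initiator(self, who: str) -> list[ActionRecord]:
        return [e for e in self._entries if e.initiated_by == who]

    def export(self) -> str:
        return json.dumps([asdict(e) for e in self._entries], indent=2)


history = ActionHistory()
history.record("system:billing-sync", "mark_overdue", {"status": "open"}, {"status": "overdue"})
history.record("ana", "manual_override", {"status": "overdue"}, {"status": "open"})
print(history.export())
```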

Permission structures also shape accountability outcomes. Effective platforms allow responsibility boundaries to be expressed through access and approval rules. This includes features such as:

- Clearly defined approval roles that match real decision authority

- Adjustable access levels that change as responsibilities shift

- Documented permission logic that explains why certain users can act while others cannot

When permission structures mirror actual responsibility, teams avoid confusion during exceptions and audits. When permissions remain unclear or overly broad, accountability dissolves into shared blame.
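Documented permission logic can live next to the rules themselves, so every allow or deny decision carries its explanation. The roles, actions, and users below are hypothetical. Actions without a documented rule are denied by default rather than silently allowed.

```python
# Hypothetical permission rules: who may act, and the documented reason why.
PERMISSIONS = {
    "approve_refund": {
        "roles": {"support_lead", "finance"},
        "why": "refunds move money and affect customer trust",
    },
    "edit_routing": {
        "roles": {"ops_owner"},
        "why": "routing changes alter who receives escalations",
    },
}

# Adjust as responsibilities shift; the rules above stay unchanged.
USER_ROLES = {"ana": {"support_lead"}, "ben": {"engineer"}}


def can(user: str, action: str) -> tuple[bool, str]:
    """Return an allow/deny decision together with its documented reason."""
    rule = PERMISSIONS.get(action)
    if rule is None:
        return False, f"'{action}' has no documented permission rule"
    held = USER_ROLES.get(user, set()) & rule["roles"]
    if held:
        return True, f"{user} holds {', '.join(sorted(held))}; {rule['why']}"
    return False, f"{user} lacks any of {', '.join(sorted(rule['roles']))}; {rule['why']}"


print(can("ana", "approve_refund"))
print(can("ben", "approve_refund"))
```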

Flexibility matters as well. Startup environments change frequently as teams grow, products mature, and responsibilities are redistributed. Platforms that allow iterative adjustment support this reality by letting teams revise routing logic, approval paths, and thresholds without rebuilding entire systems. Useful platforms allow updates such as:

- Modifying escalation timing without rewriting workflows

- Adjusting ownership mapping after team changes

- Refining validation rules as inputs stabilize

By contrast, platforms that hide logic create understanding gaps. When teams cannot see how a system reached a decision, trust deteriorates. People hesitate to rely on outputs they cannot explain, and reviews become speculative rather than analytical. Hidden logic also prevents improvement, because teams cannot identify which assumption caused a failure.

Rigid platforms introduce another risk. When systems resist adjustment, teams often create parallel processes outside the platform. These workarounds may include private spreadsheets, manual approvals through chat messages, or undocumented side agreements. Such parallel activity fragments accountability and weakens learning, because outcomes no longer trace back to a single system of record.

Choosing platforms that prioritize transparency, adjustable responsibility boundaries, and visible action histories helps maintain accountability over time. These qualities keep automation aligned with real work and allow teams to learn from outcomes instead of guessing at causes.

Common Automation Failures in Startup Environments

Automation introduces predictable risks when applied without sufficient preparation. These failures rarely stem from software limitations. They usually result from unclear responsibility, unstable processes, or neglected ownership as systems age. Understanding these risks helps teams avoid repeating common mistakes.

1. Automating Unstable Workflows

Automating immature workflows spreads confusion at scale. When steps, ownership, or decision authority remain unclear, automation multiplies inefficiency instead of resolving it. Examples include automating onboarding before eligibility criteria settle, automating approvals without agreement on authority, or automating reporting on inconsistent data inputs. Automation should reinforce existing clarity rather than compensate for its absence.

2. Encoding Unexamined Assumptions

Automation often embeds assumptions that teams never formalized. Hardcoded thresholds, escalation timing, or approval logic may reflect past conditions that no longer apply. Once embedded, these assumptions resist scrutiny and produce misleading outcomes. Teams must surface and document assumptions before turning them into rules.

3. Accountability Erosion Over Time

As teams grow, automation ownership may fade. Original designers leave, and new team members inherit systems without context. This erosion appears through unassigned alerts, outdated logic, and unclear authority to make changes. Named system owners and active documentation prevent this decay.

4. Parallel Processes Outside the System

Rigid automation encourages workarounds such as private approvals, side spreadsheets, or informal overrides. These parallel processes fragment accountability and hide learning. High-accountability environments require decisions and corrections to remain visible within one system.
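The accountability erosion described in the third failure can be caught with a periodic audit of an automation registry. The sketch assumes a hypothetical registry that records each automation's named owner and the date its documentation was last reviewed; the 180-day review window is an arbitrary example value.

```python
from datetime import date
from typing import Optional

# Hypothetical registry: each automation, its named owner, and last doc review.
registry: list[dict] = [
    {"name": "invoice reminders", "owner": "ana", "last_reviewed": date(2024, 1, 10)},
    {"name": "lead routing", "owner": "carl", "last_reviewed": date(2023, 6, 2)},
    {"name": "status sync", "owner": None, "last_reviewed": date(2024, 3, 1)},
]


def ownership_audit(items: list[dict], active_people: set[str], today: date,
                    max_age_days: int = 180) -> list[tuple[str, str]]:
    """List automations with missing or departed owners, or stale documentation."""
    findings = []
    for item in items:
        owner: Optional[str] = item["owner"]
        if owner is None:
            findings.append((item["name"], "no named owner"))
        elif owner not in active_people:
            findings.append((item["name"], f"owner {owner} is no longer active"))
        age = (today - item["last_reviewed"]).days
        if age > max_age_days:
            findings.append((item["name"], f"documentation last reviewed {age} days ago"))
    return findings


for name, issue in ownership_audit(registry, {"ana", "ben"}, date(2024, 4, 1)):
    print(f"{name}: {issue}")
# lead routing: owner carl is no longer active
# lead routing: documentation last reviewed 304 days ago
# status sync: no named owner
```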

Sustaining Accountability as Automation Expands

As automation expands within a startup, the nature of work changes in measurable ways. Higher transaction volume increases the number of automated actions, exceptions, and review events that flow through systems each day. This change places new demands on responsibility structures that were originally designed for smaller teams and lower activity levels.

Responsibility assignments must therefore be revisited as automation grows. Ownership that worked when a single person could review every exception may become unrealistic as volume increases. Reassessment allows teams to redistribute review duties, clarify escalation paths, and adjust decision boundaries so accountability remains practical rather than symbolic.
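Redistributing review duties can start with a simple rotation that respects each reviewer's capacity and surfaces anything left over instead of silently queuing it. The `assign_reviews` function and the capacity figures are hypothetical; real assignment would likely also weigh authority and experience, as discussed earlier.

```python
from itertools import cycle


def assign_reviews(exceptions: list[str], reviewers: list[str],
                   daily_capacity: dict[str, int]) -> tuple[dict[str, str], list[str]]:
    """Rotate exceptions across reviewers; return assignments and overflow."""
    load = {r: 0 for r in reviewers}
    assignments: dict[str, str] = {}
    overflow: list[str] = []
    rotation = cycle(reviewers)
    for exc in exceptions:
        # Try each reviewer at most once before giving up on this exception.
        for _ in range(len(reviewers)):
            reviewer = next(rotation)
            if load[reviewer] < daily_capacity[reviewer]:
                load[reviewer] += 1
                assignments[exc] = reviewer
                break
        else:
            overflow.append(exc)  # nobody has capacity: escalate, don't hide it
    return assignments, overflow


assigned, unassigned = assign_reviews(
    ["exc-1", "exc-2", "exc-3", "exc-4"], ["ana", "ben"], {"ana": 2, "ben": 1}
)
print(assigned)    # {'exc-1': 'ana', 'exc-2': 'ben', 'exc-3': 'ana'}
print(unassigned)  # ['exc-4']
```

A growing overflow list is itself a signal that ownership needs reassessment rather than more effort from the same people.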

Documentation plays a central educational role during expansion. Clear explanations of how automated processes function, which assumptions guide them, and who owns outcomes help new team members understand responsibility expectations quickly. Without this guidance, accountability weakens as knowledge becomes unevenly distributed.

Automation should also remain visible through planned reviews. Scheduled evaluations allow teams to examine system behavior, confirm alignment with current work patterns, and adjust oversight structures before failures accumulate. Regular visibility keeps automation connected to human judgment rather than drifting into unattended infrastructure.

Conclusion

Automation in startup environments works best when treated as a system that carries accountability instead of removing it. High-accountability organizations design automation so responsibility remains visible, traceable, and open to review. Effective design requires early preparation, including responsibility mapping, clear decision boundaries, careful platform selection, and regular evaluation. Automation does not remove human responsibility. It redistributes attention. When built thoughtfully, it supports learning, correction, and trust as organizations grow and operations expand.
